You know, when it comes to testing CRM systems, things can get pretty messy if you don’t have a solid structure in place. I’ve been through enough chaotic test cycles to know that without a clear report format, even the best testing efforts can fall flat. That’s why having a good template for CRM testing reports is such a game-changer—it keeps everything organized and makes sure nothing slips through the cracks.

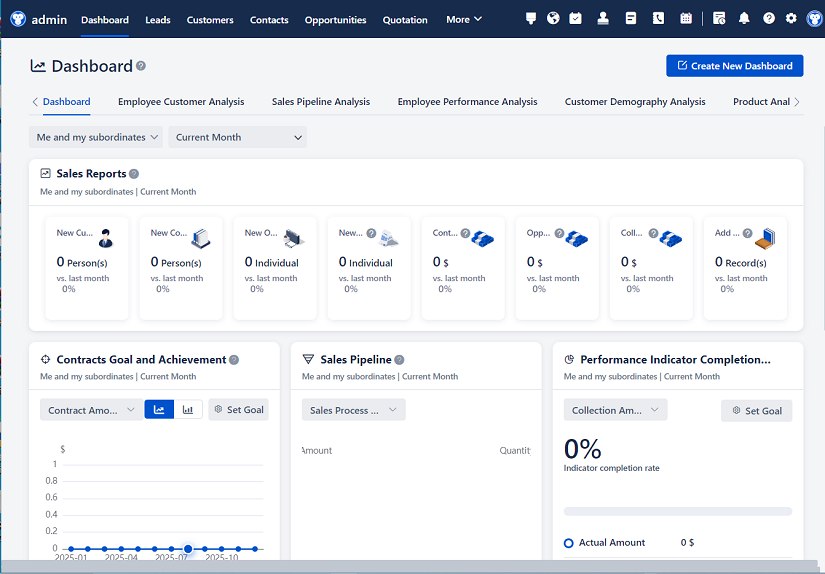

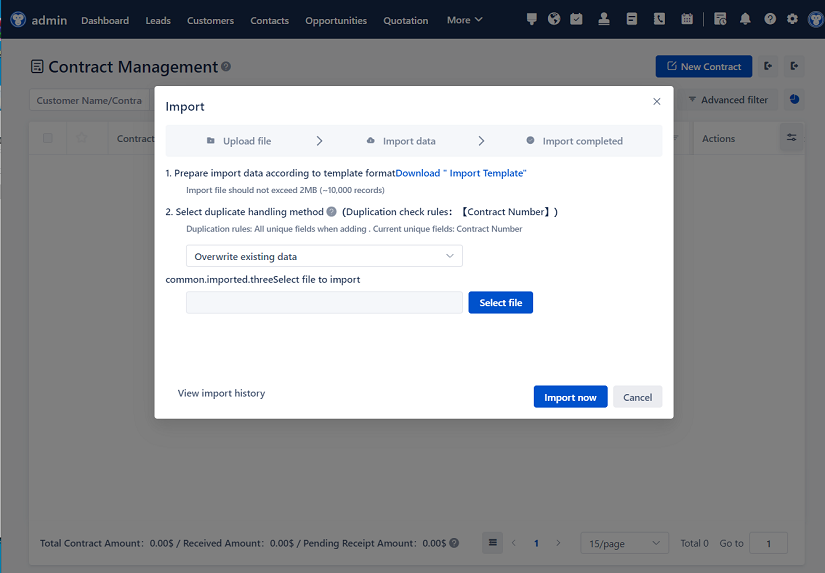

Let me tell you, one of the first things I always include in my reports is a clear summary section. It’s like the elevator pitch of the whole document—just a quick snapshot of what was tested, how it went, and whether we’re in the green or red zone. Stakeholders usually don’t have time to read every detail, so this part helps them get up to speed fast. I make sure to mention the scope, key findings, and any major blockers right at the top.

Then, of course, I dive into the test objectives. Honestly, this part keeps me focused. It reminds me—and everyone else—why we’re doing this testing in the first place. Are we checking if the new lead assignment rules work? Or making sure customer data syncs properly across modules? Being specific here stops us from going off track.

I also always list out the test environment details. You wouldn’t believe how many times a bug turned out to be an environment issue rather than a real defect. So I note down the CRM version, browser types, integration points, and even the test data used. It sounds tedious, but trust me, it saves hours of back-and-forth later.
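If it helps to make this concrete, here is a rough Python sketch of recording environment details and comparing two of them, for example the tester's setup against the one a developer used to reproduce a bug. The class name, field names, and sample values are all assumptions made up for illustration, not part of any particular CRM.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class EnvironmentRecord:
    """Environment details captured with a test run (illustrative fields)."""
    crm_version: str
    browsers: tuple
    integrations: tuple
    test_data_set: str


def diff_environments(a: EnvironmentRecord, b: EnvironmentRecord) -> dict:
    """Return {field: (value_in_a, value_in_b)} for every field that differs."""
    a_fields, b_fields = asdict(a), asdict(b)
    return {
        name: (a_fields[name], b_fields[name])
        for name in a_fields
        if a_fields[name] != b_fields[name]
    }


# Placeholder values, not real versions or data sets.
tester_env = EnvironmentRecord("example-2.1", ("Chrome",), ("email-sync",), "leads-sample-a")
developer_env = EnvironmentRecord("example-2.0", ("Chrome",), ("email-sync",), "leads-sample-a")

print(diff_environments(tester_env, developer_env))
```

An empty result means the two environments match on every recorded field, which makes an environment mismatch a less likely explanation for the bug.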

Now, when it comes to actual test cases, I break them down into categories—like user management, lead processing, opportunity tracking, reporting, and integrations. Grouping them this way makes it easier to spot patterns. If five test cases in the “lead conversion” section fail, that’s a red flag worth investigating.
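Spotting that kind of pattern is easy to automate once results carry a category. The sketch below is one possible way to do it in Python; the function names, the sample data, and the threshold of three failures are assumptions chosen for the example, so tune them to your own project.

```python
from collections import Counter


def failures_by_category(results):
    """Count failed test cases per category from (category, status) pairs."""
    return Counter(category for category, status in results if status == "Failed")


def red_flags(results, threshold=3):
    """Return categories whose failure count reaches the (assumed) threshold."""
    counts = failures_by_category(results)
    return sorted(category for category, n in counts.items() if n >= threshold)


# Made-up sample results for illustration.
sample_results = [
    ("lead conversion", "Failed"),
    ("lead conversion", "Failed"),
    ("lead conversion", "Failed"),
    ("user management", "Passed"),
    ("reporting", "Failed"),
    ("integrations", "Passed"),
]

print(red_flags(sample_results))
```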

For each test case, I write a simple description, the steps I followed, the expected result, and what actually happened. Keeping it straightforward helps developers understand the issue without getting lost in jargon. And yeah, I attach screenshots or logs whenever something goes wrong—because a picture really is worth a thousand words, especially when debugging.
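As a rough illustration, those four pieces plus attachments fit into a small Python record that can be rendered as plain text for the report. The class name, field names, and the sample case are hypothetical; the point is only that every test case carries the same fields in the same order.

```python
from dataclasses import dataclass, field


@dataclass
class CaseRecord:
    """One test case entry: description, steps, expected vs. actual result."""
    case_id: str
    description: str
    steps: list
    expected: str
    actual: str
    attachments: list = field(default_factory=list)

    def to_text(self) -> str:
        """Render the record as a plain-text block for the report."""
        lines = [f"{self.case_id}: {self.description}", "Steps:"]
        lines += [f"  {n}. {step}" for n, step in enumerate(self.steps, start=1)]
        lines += [f"Expected: {self.expected}", f"Actual: {self.actual}"]
        if self.attachments:
            lines.append("Attachments: " + ", ".join(self.attachments))
        return "\n".join(lines)


# Made-up example case.
record = CaseRecord(
    case_id="TC-001",
    description="Convert a qualified lead into an opportunity",
    steps=["Open the lead", "Click Convert", "Confirm the dialog"],
    expected="An opportunity is created and linked to the lead",
    actual="The dialog closes but no opportunity appears",
    attachments=["convert-error.png"],
)

print(record.to_text())
```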

One thing I’ve learned the hard way is to track execution status religiously. I use statuses like “Passed,” “Failed,” “Blocked,” or “Not Executed,” and update them in real time. It gives the team a live pulse of where we stand. Plus, it’s super helpful during daily stand-ups—we can quickly see which areas need attention.
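A quick count per status is usually all a stand-up needs. Here is a minimal Python sketch, assuming the four statuses named above and a simple mapping from test case ID to status; the IDs and the `status_summary` helper are invented for the example.

```python
from collections import Counter
from enum import Enum


class Status(Enum):
    PASSED = "Passed"
    FAILED = "Failed"
    BLOCKED = "Blocked"
    NOT_EXECUTED = "Not Executed"


def status_summary(statuses):
    """Return a count for every status, including those with zero cases."""
    counts = Counter(statuses)
    return {status.value: counts[status] for status in Status}


# Made-up execution state for illustration.
run = {
    "TC-001": Status.FAILED,
    "TC-002": Status.PASSED,
    "TC-003": Status.PASSED,
    "TC-004": Status.BLOCKED,
}

print(status_summary(run.values()))
```

Using an enum rather than free-text strings keeps typos like "Pased" from quietly creating a fifth status.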

Defect logging is another big piece. I never just say “it’s broken.” Instead, I explain exactly what failed, how severe it is, and who it might impact. I also assign priority levels—like Critical, High, Medium, Low—so the dev team knows what to tackle first. A critical bug that stops users from saving customer records? That jumps to the top of the list.
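To show how those priority levels can drive the order of the defect list, here is a small Python sketch. The `Defect` fields and the sample defects are assumptions for illustration; only the four priority names come from the paragraph above.

```python
from dataclasses import dataclass

# Lower number means the dev team should look at it sooner.
PRIORITY_ORDER = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3}


@dataclass
class Defect:
    defect_id: str
    summary: str
    priority: str
    impacted_users: str


def triage_order(defects):
    """Sort defects by priority; ties keep their original order."""
    return sorted(defects, key=lambda d: PRIORITY_ORDER[d.priority])


# Made-up defects for illustration.
defects = [
    Defect("D-3", "Report header is misaligned", "Low", "managers"),
    Defect("D-1", "Customer record cannot be saved", "Critical", "all sales users"),
    Defect("D-2", "Lead owner is not updated after reassignment", "High", "sales reps"),
]

for d in triage_order(defects):
    print(d.priority, d.defect_id, d.summary)
```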

And speaking of bugs, I always link each defect back to the test case that exposed it. This traceability is gold. Because each test case maps to a requirement, the link shows which requirements are affected, and it helps with regression testing later. Plus, when a fix is deployed, I can go back and retest just those linked cases instead of starting from scratch.
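Once the links exist, picking the cases to retest after a fix is a simple lookup. The sketch below assumes the links are kept as a mapping from defect ID to test case IDs; that structure, the IDs, and the `cases_to_retest` helper are all hypothetical.

```python
def cases_to_retest(defect_links, fixed_defects):
    """Return the sorted, de-duplicated test case IDs linked to fixed defects.

    defect_links maps a defect ID to the list of test case IDs linked to it.
    Unknown defect IDs are ignored rather than raising an error.
    """
    cases = set()
    for defect_id in fixed_defects:
        cases.update(defect_links.get(defect_id, []))
    return sorted(cases)


# Made-up traceability links for illustration.
defect_links = {
    "D-1": ["TC-001", "TC-007"],
    "D-2": ["TC-007", "TC-012"],
    "D-3": ["TC-020"],
}

print(cases_to_retest(defect_links, ["D-1", "D-2"]))
```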

What I really appreciate in a good template is space for observations and recommendations. Sometimes during testing, I notice little quirks—like a slow loading screen or confusing button placement—that aren’t outright bugs but could affect user experience. I jot those down too because they matter in the long run.

I also include a section on test coverage. It’s not just about how many test cases I ran, but whether I’ve touched all the important workflows. Did I test edge cases? What about different user roles? This helps answer the question: “Are we confident enough to move forward?”
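One way to make that question answerable is to treat coverage as workflow and user role pairs and list the pairs nobody has tested yet. This is only a sketch under that assumption; the workflow names, roles, and helper functions are invented for the example, and real coverage may need more dimensions, such as edge cases.

```python
from itertools import product


def uncovered_pairs(workflows, roles, executed):
    """Return every (workflow, role) pair that has no executed test."""
    return [pair for pair in product(workflows, roles) if pair not in executed]


def coverage_ratio(workflows, roles, executed):
    """Fraction of (workflow, role) pairs with at least one executed test."""
    total = len(workflows) * len(roles)
    missing = len(uncovered_pairs(workflows, roles, executed))
    return (total - missing) / total if total else 0.0


# Made-up workflows, roles, and executed pairs for illustration.
workflows = ["lead conversion", "reporting"]
roles = ["admin", "sales rep"]
executed = {
    ("lead conversion", "admin"),
    ("lead conversion", "sales rep"),
    ("reporting", "admin"),
}

print(uncovered_pairs(workflows, roles, executed))
print(coverage_ratio(workflows, roles, executed))
```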

Regression testing gets its own spotlight. After fixes are applied, I rerun relevant test cases to make sure nothing else broke. I clearly mark which ones were retested and their outcomes. It’s like a safety net—super reassuring before a release.

At the end of the report, I wrap up with a conclusion. I’ll say whether the CRM module is stable, if it’s ready for UAT, or if we need more time. No fluff—just a clear verdict based on the evidence.

Oh, and I never forget to list the people involved. Shout-outs to testers, developers, business analysts—it’s only fair. Testing is a team sport, after all.

Using a consistent template has made my life so much easier. It cuts down on confusion, speeds up approvals, and builds trust with stakeholders. They know exactly what to expect and when. Plus, it creates a reliable history we can refer back to in future projects.

Look, no template is perfect out of the box. I’ve tweaked mine over time—adding sections, removing clutter, adjusting formats based on feedback. But the core idea stays the same: clarity, consistency, and communication.

If you’re still writing CRM test reports in random emails or scattered notes, I’d seriously suggest giving a structured template a try. It might feel like extra work at first, but once you get into the rhythm, it becomes second nature. And honestly, your future self will thank you when you’re trying to debug an issue six months later.

So yeah, that’s how I do it. Nothing fancy—just practical, human-centered reporting that keeps everyone on the same page.
