
You know, I’ve been thinking a lot lately about how much technology has changed the way we live—especially when it comes to the systems we use every day. Like, have you ever noticed how your phone seems to “know” what you’re going to search for before you even type it? Or how Netflix recommends shows that feel like they were made just for you? That’s not magic—it’s personalization in information systems, and honestly, it’s kind of wild when you really think about it.

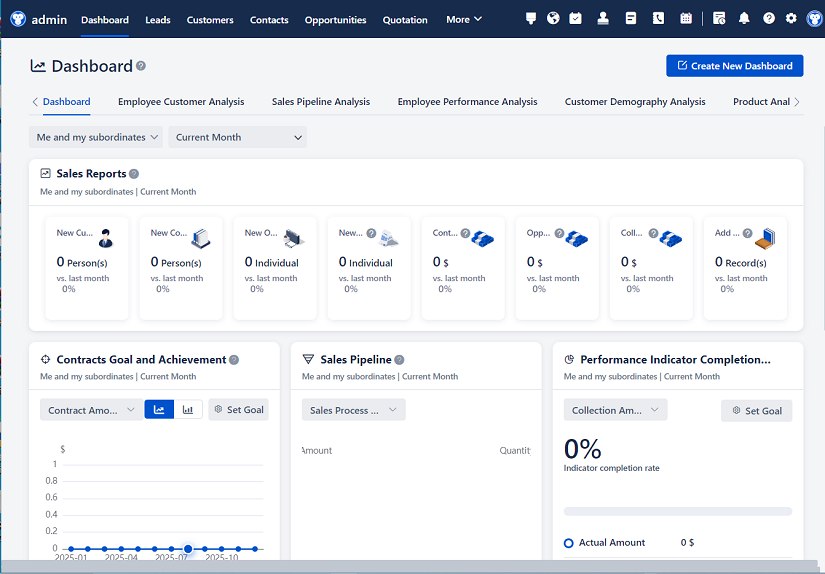

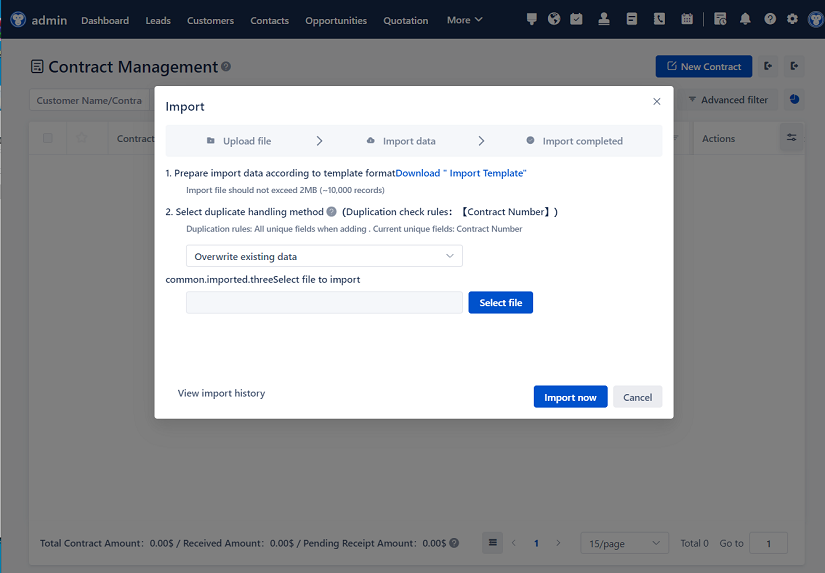


I mean, back in the day, if you wanted to find a movie to watch, you’d probably rent a DVD or flip through cable channels. Now? You open an app, and within seconds, there’s a whole row labeled “Because you watched…” It feels almost too convenient, right? But behind that convenience is a massive network of data collection, algorithms, and machine learning models working overtime to tailor your experience.

And it’s not just entertainment. Think about online shopping. The last time I bought running shoes, I didn’t even have to search for them again—the next day, ads for socks, water bottles, and fitness trackers started popping up everywhere. Creepy? Maybe a little. Effective? Absolutely. That’s personalization at work, using your past behavior to predict what you might want next.
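To make "using your past behavior to predict what you might want next" a bit more concrete, here is a minimal sketch of one very simple approach: counting which items tend to be bought together. The purchase histories and the function names (`build_cooccurrence`, `recommend`) are made up for illustration. Real recommendation systems are far more elaborate, and nothing here is meant to describe how any particular store actually does it.

```python
from collections import Counter
from itertools import permutations


def build_cooccurrence(baskets):
    """Count how often each ordered pair of items appears in the same basket."""
    counts = {}
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            counts.setdefault(a, Counter())[b] += 1
    return counts


def recommend(counts, history, k=3):
    """Rank items not yet in `history` by how often they co-occur with it."""
    scores = Counter()
    for item in history:
        for other, n in counts.get(item, {}).items():
            if other not in history:
                scores[other] += n
    # Sort by descending count, then alphabetically so ties are deterministic.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:k]]


# Hypothetical purchase histories, purely illustrative.
baskets = [
    ["running shoes", "socks", "water bottle"],
    ["running shoes", "socks"],
    ["running shoes", "fitness tracker"],
    ["yoga mat", "water bottle"],
]
counts = build_cooccurrence(baskets)
print(recommend(counts, ["running shoes"]))
# ['socks', 'fitness tracker', 'water bottle']
```

Buy the shoes, and socks float to the top simply because other shoppers bought them together most often. No mind reading required, just counting.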

But here’s the thing—I’m not sure everyone realizes just how much of their digital life is being shaped by these systems. Every click, every scroll, every pause on a product page—it’s all being recorded. And while some people might say, “Well, I’m not doing anything wrong, so why should I care?” I think it’s worth asking: who really owns that data? And more importantly, how much control do we actually have?

Let me tell you, from my own experience, once you start paying attention, it’s hard to unsee. I remember one time I was talking to a friend about hiking boots—just casually, over coffee—and later that evening, I opened Instagram and boom, there was an ad for hiking gear. Now, was that coincidence? Maybe. But given how often this happens, I’m starting to believe our devices are listening. Whether it’s through voice assistants, background apps, or cookies tracking us across websites, the level of personalization today feels both impressive and… unsettling.

Still, I can’t deny how useful it is. I used to spend ages comparing flights and hotels when planning trips. Now, travel sites show me options based on where I’ve gone before, my budget range, even the time of year I usually travel. It saves me so much time. Same with news apps—they learn what topics interest me and push those stories to the top. No more wading through articles about politics when all I want is the latest tech update.

But then again, that raises another concern: are we missing out on things we should see, just because the system assumes we won’t like them? Like, what if I never get exposed to different viewpoints or new interests because the algorithm keeps feeding me the same stuff? It’s like living in a bubble, and I don’t know if that’s healthy.

I talked to my cousin about this once—he works in IT—and he told me that personalization isn’t just about showing you what you like. It’s also about increasing engagement. Companies want you to stay on their platform longer, click more ads, buy more stuff. So they design these systems to be addictive, in a way. The more personalized the content, the more likely you are to keep scrolling. And honestly? He’s not wrong. How many times have you said, “Just five more minutes on TikTok,” and suddenly it’s two hours later?

It makes me wonder—do these systems understand us better than we understand ourselves? I mean, sometimes I look at my recommended playlists and think, “Wow, this is exactly my mood.” Other times, I get suggestions that make zero sense. But even the misses are part of the learning process. Every thumbs down teaches the system something new.
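Here is a rough sketch of what "every thumbs down teaches the system something" could look like in code. It assumes a toy model where each song carries a few tags and the system keeps one weight per tag. The names `update_preferences` and `score`, the tags, and the learning rate of 0.2 are all invented for illustration, not taken from any real music app.

```python
def update_preferences(prefs, item_tags, liked, rate=0.2):
    """Nudge each tag's weight toward +1 on a thumbs up, -1 on a thumbs down."""
    target = 1.0 if liked else -1.0
    updated = dict(prefs)  # leave the caller's dict untouched
    for tag in item_tags:
        old = updated.get(tag, 0.0)
        updated[tag] = old + rate * (target - old)
    return updated


def score(prefs, item_tags):
    """Average the learned weights of an item's tags; unknown tags count as 0."""
    if not item_tags:
        return 0.0
    return sum(prefs.get(tag, 0.0) for tag in item_tags) / len(item_tags)


prefs = {}
prefs = update_preferences(prefs, ["country", "acoustic"], liked=False)
prefs = update_preferences(prefs, ["indie", "acoustic"], liked=True)

# After one thumbs down and one thumbs up, indie now outranks country.
print(score(prefs, ["indie"]) > score(prefs, ["country"]))  # True
```

Notice that "acoustic" ends up close to neutral, because it showed up in both a liked and a disliked song. That is roughly why a single miss doesn't wreck your recommendations: one signal only moves the weights a little.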

And it’s not just consumer-facing stuff. Personalization is creeping into education, healthcare, even government services. Imagine a learning platform that adapts to your pace, focusing more on the topics you struggle with. Or a health app that reminds you to take medication based on your daily routine. That sounds amazing, right? But again, it requires access to deeply personal data. What if that info gets leaked or misused?

I remember reading about a school district that used personalized learning software, and parents freaked out because the system was collecting everything—how long kids spent on each question, their facial expressions during tests, even their mouse movements. Is that helpful insight or straight-up surveillance? I don’t know. It blurs the line.

Then there’s the issue of bias. Algorithms are built by people, and people have biases—conscious or not. So if a hiring system uses personalization to match candidates with jobs based on past hires, it might keep favoring the same types of people, reinforcing inequality. I heard about a case where an AI recruiting tool downgraded resumes with words like “women’s chess club” or degrees from women’s colleges. That’s not personalization—that’s discrimination hiding behind code.

And let’s not forget accessibility. Not everyone has the same access to high-speed internet or the latest devices. So while some people enjoy hyper-personalized experiences, others are stuck with generic, one-size-fits-all interfaces. That creates a digital divide, where personalization becomes a luxury rather than a standard feature.

Still, I think the potential is huge—if done right. Imagine a world where your doctor gets real-time updates from your wearable device, and the system alerts them if your heart rate spikes abnormally. Or a city traffic system that adjusts signals based on individual commuter patterns to reduce congestion. That’s not sci-fi; that’s where we’re headed.

But we need rules. We need transparency. I should be able to look at my profile and see what data is being used, how it’s being used, and have the option to say, “No, don’t use that.” Right now, most of us just click “Accept” on privacy policies without reading them—because let’s be honest, who has time to read 30 pages of legal jargon?

There are places trying to fix this. The EU's GDPR gives people more control over their data, and California has its own version, the CCPA. But enforcement is spotty, and companies find loopholes. Plus, opting out often means losing functionality. Try using a social media site without allowing tracking and you'll often get a worse experience: features that stop working, irrelevant content. It's like punishment for wanting privacy.

I asked a friend who’s into tech ethics what she thought, and she said the real problem is consent. We don’t truly consent when the alternative is being left out of digital society. If all your friends are on a personalized messaging app and you refuse to join because of privacy concerns, you’re socially isolated. That’s not freedom—that’s coercion disguised as choice.

And yet, despite all the risks, I still use these systems. I love my music recommendations. I appreciate when my calendar suggests meeting times based on my habits. I can’t imagine going back to the old way of doing things. So maybe the answer isn’t to reject personalization, but to demand better versions of it.

What if companies were rewarded for ethical data use? What if there were clear labels—like nutrition facts—on apps showing how much data they collect and why? What if we could easily transfer our preferences between platforms, so we don’t have to retrain every new service from scratch?

I think users should own their data profiles. Like, if I spend months teaching a music app what I like, shouldn’t I be able to export that taste profile and take it to another service? Instead, companies lock that data down, making it hard to leave. That’s not user empowerment—that’s vendor lock-in.

Another idea: what if personalization wasn’t just about consumption? What if it helped us grow? Like an app that notices I’ve been watching a lot of cooking videos and suggests a local class, or reminds me to call my mom because I haven’t in a while. That kind of thoughtful nudging feels different—less manipulative, more supportive.

But we’re not there yet. Most personalization today is driven by profit, not well-being. And until that changes, we’ll keep wrestling with the trade-offs: convenience vs. privacy, relevance vs. serendipity, efficiency vs. fairness.

Still, I’m hopeful. I see younger generations questioning these systems more. They’re skeptical of big tech, they care about digital rights, they demand accountability. Maybe they’ll be the ones to reshape how personalization works—not as a tool for manipulation, but as a partner in living better lives.

At the end of the day, I think personalization should serve humans, not the other way around. It should adapt to us, not trap us. It should open doors, not close them. And yeah, it’s complicated. But if we pay attention, speak up, and demand better, I believe we can build systems that feel less like surveillance and more like support.

So next time you see a perfectly timed recommendation, take a second to wonder: how did it know? And more importantly—do you want it to know? Because the future of personalization isn’t just in the hands of engineers and CEOs. It’s in ours, too.

Q&A Section

Q: Is personalization always based on tracking my online activity?

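A: Mostly, yes. Systems collect data from your searches, clicks, location, and even how long you hover over a button. Whether apps also listen through your microphone to target ads is a popular suspicion, but it's much more contested, and ordinary tracking is often enough to explain those eerily well-timed ads.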

Q: Can I stop websites from personalizing content for me?

A: You can limit it—by clearing cookies, using private browsing, or adjusting privacy settings—but it’s hard to stop completely without giving up certain features or platforms.

Q: Does personalization make the internet less fair?

A: It can. If algorithms reinforce existing biases or only show people what they already agree with, it can deepen divides and limit opportunities for underrepresented groups.

Q: Are there laws protecting me from misuse of my data in personalized systems?

A: Yes, in some regions—like the EU with GDPR or California with CCPA—but enforcement varies, and many countries still lack strong regulations.

Q: Can personalization actually help me discover new things?

A: Sometimes! Good systems balance familiar content with smart suggestions—like Spotify’s Discover Weekly—which introduces music you might love but never searched for.

Q: Why do companies personalize so much?

A: Because it works. Personalized content keeps users engaged longer, increases sales, and helps companies stand out in crowded markets.

Q: Is there a way to personalize without sacrificing privacy?

A: Researchers are exploring “federated learning,” where your raw data stays on your device and only model updates are sent back to be combined with everyone else’s. It’s promising, and some products already use it, but it’s far from the norm.
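If you're curious what that looks like in practice, here is a toy sketch of one round-based scheme often called federated averaging. Everything in it is an assumption for illustration: the "model" is a single number `w` in `y = w * x`, the three clients and their data are made up, and the helper names `local_update` and `federated_round` are hypothetical. Real deployments add safeguards such as secure aggregation and noise that this sketch leaves out.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """Take a few gradient steps on one device's private data for y ~ weight * x."""
    w = weight
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_weight, clients):
    """Average the locally trained weights, weighted by each client's sample count.

    The server only receives a weight and a count per client, never the
    (x, y) pairs themselves.
    """
    total = sum(len(data) for data in clients)
    weighted = sum(local_update(global_weight, data) * len(data) for data in clients)
    return weighted / total


# Hypothetical private datasets, one per device; all follow y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
print(round(w, 2))  # should land very close to 2.0
```

The point to take away is the shape of the data flow: the training happens where the data lives, and only the learned number travels. Keep in mind that model updates can still leak information about the underlying data, which is part of why this remains an active research area.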

Q: Do I have to accept all tracking to use modern apps?

A: Often, yes—but you can choose alternatives that prioritize privacy, like search engines that don’t track you or apps with transparent data policies.
